“Explainable AI is crucial to ensure trust and transparency in AI applications, enabling humans to understand and evaluate the decisions made by machines.”

This quote is attributed to IBM Watson, a provider of AI technologies and solutions.

1. Introduction to Explainable AI

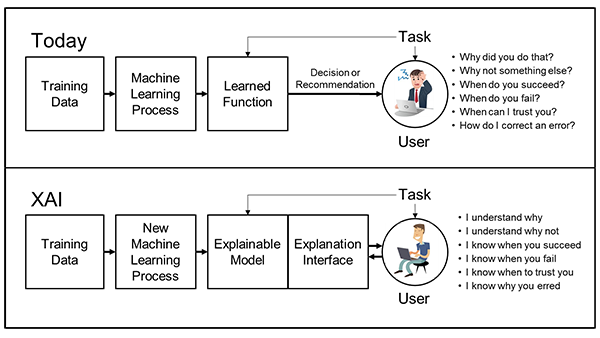

Dramatic success in machine learning has led to a spurt of Artificial Intelligence (AI) applications. Continued advances promise to produce autonomous systems that will perceive, learn, decide, and act on their own.

However, the effectiveness of these systems is limited by their current inability to explain their decisions and actions to human users. As AI becomes more advanced, it also becomes increasingly complex and difficult to understand.

The lack of transparency and interpretability of AI models can raise concerns about ethical, legal, and social implications. To address these concerns, Explainable AI (XAI) has emerged as a crucial area of research in AI. In this context, explainability refers to the ability of AI systems to provide understandable and justifiable explanations for their decisions or predictions.

Explainability has significant implications for AI ethics, accountability, and trust, and it can also help improve the performance and usability of AI systems.

2. Importance of Transparency and Accountability in AI

Transparency and accountability are crucial aspects of any technology, especially when it comes to artificial intelligence (AI). As AI is becoming increasingly complex and ubiquitous, it is becoming harder to understand how AI systems make decisions. This lack of transparency can raise ethical, legal, and social concerns about the use of AI.

Additionally, it is difficult to hold AI systems accountable for their actions if their decision-making process is not transparent. This lack of accountability can lead to unintended consequences, bias, and potential harm to individuals or society.

Therefore, it is essential to ensure that AI systems are transparent and accountable, and this can be achieved through Explainable AI (XAI) techniques. XAI provides understandable and justifiable explanations for AI decisions, enabling humans to better understand and trust AI systems. This can enhance the ethical and responsible development and deployment of AI systems and foster public trust in AI technology.

3. Objective of Explainable AI

Explainable AI aims to create machine learning techniques that:

- Produce more explainable models, while maintaining a high level of learning performance.

- Enable human users to understand, appropriately trust, and effectively manage the emerging generation of artificially intelligent partners.

New machine-learning systems will have the ability to explain their rationale, characterize their strengths and weaknesses, and convey an understanding of how they will behave in the future.

4. Techniques Used in Explainable AI

Explainable AI (XAI) uses various techniques to provide understandable and justifiable explanations for AI decisions. Some of the commonly used techniques include:

- Rule-based systems: This technique uses a set of explicit rules to determine the output of an AI model.

- Decision trees: Decision trees use a hierarchical structure of rules and conditions to make decisions, which can help in providing interpretable explanations for AI decisions.

- Neural network visualization: This technique uses visual representations of the decision-making process to provide a better understanding of the relationship between inputs and outputs of an AI model.

- Prototypes and counterfactuals: This technique generates examples that are similar to, or different from, the original input to explain the decision of an AI model.

- Local interpretable model-agnostic explanations (LIME): LIME uses a simple model to explain the predictions of a complex AI model by generating local explanations around a specific input.

These techniques are not exhaustive, and researchers continue to develop new methods to improve XAI. By using these techniques, XAI can help improve the transparency and accountability of AI systems and enhance human trust in AI technology.

5. How Explainable AI Works with the Above Techniques

Explainable AI (XAI) works with the help of various techniques to provide understandable and justifiable explanations for AI decisions.

Rule-based systems use explicit rules to determine the output of an AI model, and these rules can be interpreted by humans to understand the reasoning behind an AI decision.
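To make this concrete, here is a minimal sketch of a rule-based decision that reports which rule fired. The loan scenario, the field names (`credit_score`, `debt_to_income`), and the thresholds are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class Rule:
    name: str
    condition: Callable[[Dict], bool]
    outcome: str
    reason: str


# Hypothetical rules, checked in order; the first rule that matches decides.
RULES = [
    Rule("low_credit_score", lambda a: a["credit_score"] < 600,
         "reject", "credit score is below 600"),
    Rule("high_debt_ratio", lambda a: a["debt_to_income"] > 0.45,
         "reject", "debt-to-income ratio is above 0.45"),
    Rule("default_approve", lambda a: True,
         "approve", "no rejection rule applied"),
]


def decide(applicant: Dict) -> Tuple[str, str]:
    """Return (outcome, explanation) from the first rule that fires."""
    for rule in RULES:
        if rule.condition(applicant):
            return rule.outcome, f"{rule.name}: {rule.reason}"
    raise ValueError("no rule matched")


outcome, why = decide({"credit_score": 640, "debt_to_income": 0.52})
print(outcome, "-", why)
```

Because every decision is tied to a named rule, the explanation is simply the rule that produced it. For the sample applicant this prints `reject - high_debt_ratio: debt-to-income ratio is above 0.45`.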

Decision trees use a hierarchical structure of rules and conditions to make decisions, which can help in providing interpretable explanations for AI decisions. This can make it easier for humans to understand the decision-making process of an AI model.
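As a rough illustration, the path a sample takes through a tree can be read back as the explanation for its prediction. The tree below is hand-written and hypothetical (in practice the splits would be learned from data), and the feature names `age` and `income` are assumptions for the example.

```python
# A hypothetical hand-written tree; real trees learn their splits from data.
TREE = {
    "feature": "age", "threshold": 30,
    "left": {
        "feature": "income", "threshold": 40000,
        "left": {"label": "decline"},
        "right": {"label": "offer"},
    },
    "right": {"label": "offer"},
}


def predict_with_path(node, sample):
    """Walk the tree and record every test taken on the way to a leaf."""
    path = []
    while "label" not in node:
        feature, threshold = node["feature"], node["threshold"]
        if sample[feature] <= threshold:
            path.append(f"{feature} = {sample[feature]} <= {threshold}")
            node = node["left"]
        else:
            path.append(f"{feature} = {sample[feature]} > {threshold}")
            node = node["right"]
    return node["label"], path


label, path = predict_with_path(TREE, {"age": 25, "income": 52000})
print(label)
for step in path:
    print("  because", step)
```

For this sample the prediction is `offer`, and the recorded path (`age = 25 <= 30`, then `income = 52000 > 40000`) is the human-readable reason.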

Neural network visualization techniques use visual representations of the decision-making process to provide a better understanding of the relationship between inputs and outputs of an AI model. This can help humans to understand how the model arrived at a particular decision.
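One simple ingredient behind many such visualizations is a saliency score: how much the output changes when each input is nudged. The sketch below estimates this with finite differences on a tiny network and draws a text bar chart. The weights are made up for illustration, and production tools typically compute gradients by backpropagation and render them as heatmaps instead.

```python
import math

# Hypothetical fixed weights for a tiny network: 3 inputs -> 2 hidden units -> 1 output.
W1 = [[2.0, -1.0, 0.0],
      [0.5, 0.5, 0.1]]
B1 = [0.0, -0.5]
W2 = [1.5, -1.0]
B2 = 0.1


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def model(x):
    """Forward pass: tanh hidden layer followed by a sigmoid output."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, B1)]
    return sigmoid(sum(w * h for w, h in zip(W2, hidden)) + B2)


def saliency(x, eps=1e-4):
    """Central finite-difference estimate of d(output)/d(input_i) for each input."""
    scores = []
    for i in range(len(x)):
        up = list(x)
        up[i] += eps
        down = list(x)
        down[i] -= eps
        scores.append((model(up) - model(down)) / (2 * eps))
    return scores


x = [0.2, 0.1, 0.9]
scores = saliency(x)
largest = max(abs(s) for s in scores)
for name, s in zip(["x0", "x1", "x2"], scores):
    bar = "#" * round(20 * abs(s) / largest)
    print(f"{name} {s:+.3f} {bar}")
```

The sign of each score shows whether increasing that input pushes the output up or down, and the bar length shows its relative influence at this particular input.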

Prototypes and counterfactuals generate examples that are similar to, or different from, the original input to explain the decision of an AI model. This can help humans to understand the model’s behavior in different scenarios.
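The counterfactual half of this idea can be sketched as a search for the smallest change to one feature that flips the decision. The points-based scoring model, its weights, and the feature names below are hypothetical stand-ins for a real classifier, and the single-feature step search is a deliberately naive strategy.

```python
# Hypothetical integer scoring model standing in for a black-box classifier.
POINTS = {"income_k": 5, "credit_score": 1, "open_loans": -50}
THRESHOLD = 800


def approve(applicant):
    """Approve when the weighted point total reaches the threshold."""
    score = sum(POINTS[name] * applicant[name] for name in POINTS)
    return score >= THRESHOLD


def counterfactual(applicant, feature, step, max_steps=1000):
    """Change one feature in fixed steps until the decision flips.

    Returns the modified applicant, or None if no flip is found.
    """
    original = approve(applicant)
    candidate = dict(applicant)
    for _ in range(max_steps):
        candidate[feature] += step
        if approve(candidate) != original:
            return candidate
    return None


applicant = {"income_k": 30, "credit_score": 620, "open_loans": 2}
print("approved:", approve(applicant))
cf = counterfactual(applicant, "credit_score", step=5)
if cf is not None:
    print("would flip if credit_score were", cf["credit_score"])
```

Here the applicant is rejected, and the search reports that a `credit_score` of 750 would have led to approval. That statement is the counterfactual explanation.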

Local interpretable model-agnostic explanations (LIME) use a simple model to explain the predictions of a complex AI model by generating local explanations around a specific input. This can help humans to understand the decision-making process of a complex AI model in a simplified manner.
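The core idea can be sketched without the `lime` library itself: sample points near the input, weight them by proximity, and fit a weighted linear model whose coefficients serve as the local explanation. Everything below is an illustrative assumption, including the `black_box` function, the Gaussian sampling scale, and the kernel width. It is a LIME-style sketch rather than the reference implementation.

```python
import math
import random


def black_box(x):
    """Hypothetical nonlinear model standing in for a complex classifier."""
    z = 3.0 * x[0] - 2.0 * x[1] + x[0] * x[1]
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve a small linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def local_surrogate(predict, point, n_samples=500, scale=0.3, width=0.5, seed=0):
    """Fit a proximity-weighted linear model to `predict` around `point`.

    Returns [intercept, coef_0, coef_1, ...], with coefficients expressed
    in terms of offsets from `point`.
    """
    rng = random.Random(seed)
    d = len(point) + 1
    xtwx = [[0.0] * d for _ in range(d)]  # accumulates X^T W X
    xtwy = [0.0] * d                      # accumulates X^T W y
    for _ in range(n_samples):
        offset = [rng.gauss(0.0, scale) for _ in point]
        sample = [p + o for p, o in zip(point, offset)]
        dist2 = sum(o * o for o in offset)
        weight = math.exp(-dist2 / width ** 2)  # closer samples count more
        row = [1.0] + offset
        y = predict(sample)
        for i in range(d):
            xtwy[i] += weight * row[i] * y
            for j in range(d):
                xtwx[i][j] += weight * row[i] * row[j]
    return solve(xtwx, xtwy)


intercept, *coefs = local_surrogate(black_box, [0.5, 0.5])
for name, c in zip(["x0", "x1"], coefs):
    print(f"{name}: {c:+.3f}")
```

Around this input the surrogate assigns a positive coefficient to `x0` and a negative one to `x1`, which says that locally the first feature pushes the prediction up and the second pushes it down. The explanation is only valid near the chosen point.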

By using these techniques, XAI can improve the transparency and accountability of AI systems, enabling humans to better understand and trust AI technology.

6. Benefits of Explainable AI for Businesses and Consumers

Explainable AI (XAI) has several benefits for both businesses and consumers.

Benefits of XAI for businesses:

- Improved decision-making: XAI can provide businesses with understandable and justifiable explanations for AI decisions, which can help them make more informed and accurate decisions.

- Increased trust: XAI can help businesses build trust with their customers by providing transparent and accountable AI systems, which can help increase customer loyalty.

- Compliance with regulations: XAI can help businesses comply with regulatory requirements related to transparency and accountability of AI systems.

- Better risk management: XAI can help businesses identify potential risks and biases in AI models, enabling them to make more informed decisions to mitigate these risks.

Benefits of XAI for consumers:

- Better understanding: XAI can help consumers understand the decision-making process of AI models, enabling them to make more informed choices.

- Increased trust: XAI can help consumers trust AI technology more, knowing that the decisions made by AI models are transparent and accountable.

- Fair treatment: XAI can help prevent biased AI models, ensuring that consumers are treated fairly and equitably.

- Improved privacy: XAI can help protect consumer privacy by providing greater transparency about the data used by AI models and how it is used.

Overall, XAI can help promote the responsible and ethical use of AI technology, benefiting both businesses and consumers.

7. Real-World Applications of Explainable AI

Explainable AI (XAI) has several real-world applications across various industries. Here are some examples:

- Healthcare: XAI can help healthcare providers diagnose and treat patients by providing interpretable explanations for AI models that analyze medical images or electronic health records. This can improve patient outcomes and reduce medical errors.

- Finance: XAI can help financial institutions make more informed decisions by providing transparent and accountable AI models for fraud detection, credit risk assessment, and portfolio management.

- Autonomous vehicles: XAI can help improve the safety and reliability of autonomous vehicles by providing interpretable explanations for AI models that control vehicle functions.

- Customer service: XAI can help improve the customer experience by providing interpretable explanations for AI models used in chatbots and virtual assistants, enabling customers to understand the reasoning behind responses.

- Natural language processing: XAI can help improve the accuracy and interpretability of AI models used for natural language processing, enabling better understanding of language and communication.

- Manufacturing: XAI can help improve the efficiency and quality of manufacturing processes by providing interpretable explanations for AI models used in predictive maintenance and quality control.

Overall, XAI can help improve the transparency, accountability, and trustworthiness of AI models, enabling their responsible and ethical use in various industries.

8. Potential Challenges and Limitations of Explainable AI

While Explainable AI (XAI) has several benefits, there are also potential challenges and limitations to its implementation. Here are some examples:

- Complexity: Some AI models are highly complex and may be difficult to explain in a way that is understandable to humans.

- Trade-off between accuracy and interpretability: In some cases, AI models that are highly accurate may be less interpretable, and vice versa. Finding the right balance between accuracy and interpretability can be challenging.

- Data availability: Many XAI techniques rely on representative, high-quality data to produce meaningful explanations. However, such data may not always be available, especially for new or niche applications.

- Bias and fairness: While XAI can help identify and mitigate bias in AI models, it may not be able to eliminate bias completely. Ensuring fairness in AI systems remains a challenge.

- Performance: Some XAI techniques can be computationally expensive, which can affect the performance of AI models in real-time applications.

- Lack of standardization: There is currently a lack of standardization in XAI techniques, which can make it difficult to compare and evaluate different AI models.

- Privacy concerns: Providing interpretable explanations for AI models may involve revealing sensitive information about individuals or organizations, which can raise privacy concerns.

Overall, addressing these challenges and limitations is crucial for the successful implementation and adoption of XAI, and ongoing research and development in this field is necessary.

9. Future Outlook and Advancements in Explainable AI

Explainable AI (XAI) is a rapidly evolving field, and several developments are worth watching. Here are some examples:

- Interdisciplinary research: XAI requires expertise in several fields, including computer science, psychology, and philosophy. Interdisciplinary research can help advance the development and adoption of XAI.

- Explainability in deep learning: Deep learning models are often highly complex and difficult to explain. Advancements in techniques for explainability in deep learning can help make these models more transparent and accountable.

- Standardization: Standardization in XAI techniques can help improve comparability and evaluation of different AI models, making it easier for organizations to adopt and implement XAI.

- Human-centered design: Incorporating human-centered design principles in the development of XAI systems can help ensure that they are usable, trustworthy, and ethical.

- Ethics and governance: XAI raises several ethical and governance considerations, including issues related to fairness, bias, privacy, and accountability. Ongoing research and development in these areas can help address these concerns.

- Advancements in natural language processing: Natural language processing is a key application area for XAI. Advancements in techniques for explainability in natural language processing can help improve the accuracy and interpretability of AI models used in this field.

Overall, advancements in XAI will continue to drive the responsible and ethical use of AI technology, making it more transparent, accountable, and trustworthy for both businesses and consumers.

10. Conclusion: Embracing Explainable AI for Ethical and Sustainable AI Development

Explainable AI (XAI) is a critical aspect of ethical and sustainable AI development. XAI provides transparent and accountable AI models that are interpretable to humans, enabling us to understand how they make decisions and take actions. The benefits of XAI are manifold, including increased trust in AI systems, improved decision-making, and reduced bias.

As AI continues to advance and become more pervasive across industries, it is crucial to ensure that it is developed in an ethical and sustainable manner. Embracing XAI can help ensure that AI is used responsibly and ethically, enabling its safe and beneficial integration into our lives.

However, the implementation of XAI also presents several challenges and limitations, including complexity, trade-offs between accuracy and interpretability, and privacy concerns. Addressing these challenges and limitations requires ongoing research and development in the field.

In conclusion, embracing XAI is essential for the responsible and ethical development of AI technology. By prioritizing transparency and accountability in AI models, we can ensure that AI technology is used for the greater good, advancing progress and promoting human welfare.

11. Glossary

- Explainable AI (XAI): A field of research and development in artificial intelligence that focuses on creating models and systems that are transparent, interpretable, and accountable.

- Transparency: The quality of being open and easily understandable, allowing for clear insights into the decision-making processes of AI models.

- Accountability: The responsibility of an AI model to be transparent about its decision-making process and actions, and to be held responsible for any unintended consequences.

- Machine learning: A type of artificial intelligence that allows systems to automatically learn and improve from experience, without being explicitly programmed.

- Natural language processing (NLP): A subfield of AI that focuses on enabling computers to understand, interpret, and generate human language.

- Model-agnostic techniques: XAI techniques that are not specific to any particular AI model, and can be applied to a wide range of models.

- Black-box models: AI models that are highly complex and difficult to understand or interpret.

- Counterfactual explanations: Explanations that show how an AI model would have made a different decision if certain inputs or circumstances had been different.

- Interdisciplinary research: Research that involves multiple fields of study, such as computer science, psychology, and philosophy, to advance the development and adoption of XAI.

- Human-centered design: A design approach that prioritizes the needs, experiences, and perspectives of humans, ensuring that AI systems are usable, trustworthy, and ethical.

- Ethics and governance: The ethical and governance considerations that arise from the development and use of AI systems, including issues related to fairness, bias, privacy, and accountability.

- Standardization: The process of developing and implementing standards, guidelines, and best practices for the development and evaluation of AI models.

- Bias: Systematic and unfair distortion or discrimination in decision-making, often based on characteristics such as race, gender, or age.

- Fairness: The principle of treating individuals and groups equally and without bias, often measured through metrics such as statistical parity or disparate impact.

- Privacy: The protection of personal information from unauthorized access, use, or disclosure.

- Interpretability: The ability of an AI model to be understood and interpreted by humans, often through the use of visualizations, explanations, or other techniques.

- Trust: The confidence and reliance placed on an AI system by users or stakeholders, often influenced by factors such as transparency, accuracy, and fairness.

- Explainability techniques: Methods or tools used to increase the transparency and interpretability of AI models, such as LIME, SHAP, or decision trees.

- Adversarial attacks: Deliberate attempts to deceive or manipulate an AI system by inputting malicious or misleading data, often used to evaluate the robustness of AI models.

- Model selection: The process of choosing the most appropriate AI model for a given task or application, often based on criteria such as accuracy, interpretability, or computational efficiency.

12. References

“Explainable Artificial Intelligence (XAI) Overview” by Defense Advanced Research Projects Agency (DARPA): https://www.darpa.mil/program/explainable-artificial-intelligence

“An Introduction to Explainable AI and its Applications” by IBM Watson: https://www.ibm.com/cloud/learn/explainable-ai
